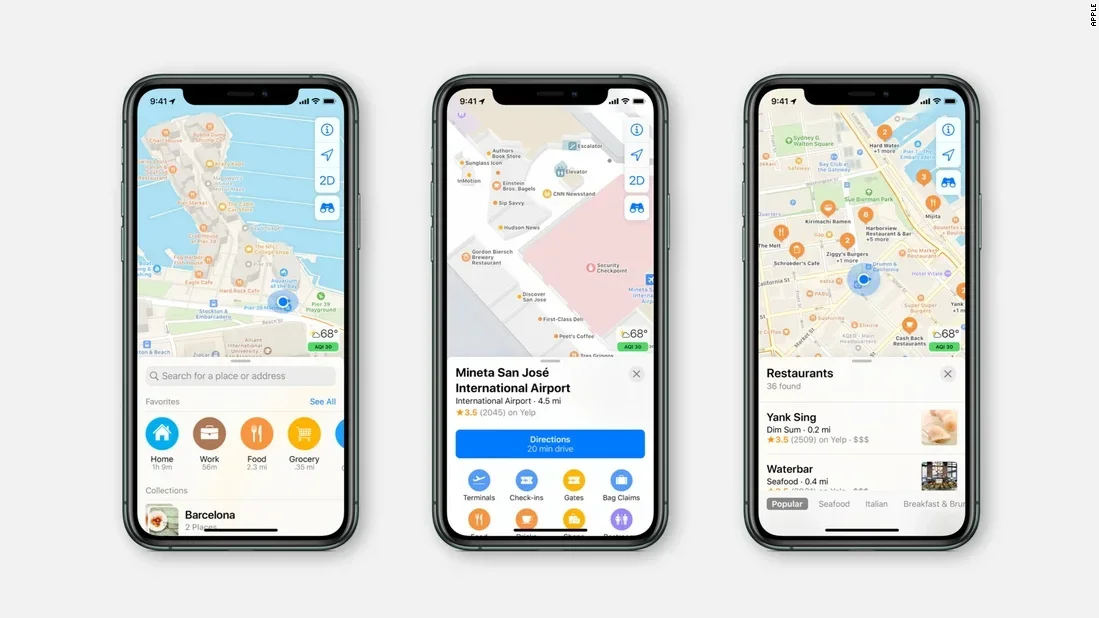

Augmented Reality (AR) overlays computer-generated images on a real-world view. In Apple Maps, for example, you can use AR to get walking directions overlaid on a live view from the rear camera, showing you which way to go. This feature is only available in certain cities and is similar to Live View in Google Maps. Supported devices include the iPhone SE 2 and SE 3, the 2018 iPhone XR, iPhone XS, and iPhone XS Max, and later models.

Apple is asking for your help to improve the accuracy of its AR features in Apple Maps

Because this feature needs your iPhone to determine your current location, Apple can collect certain data from your phone, with your permission, to improve the accuracy of AR location data on your handset. In a new support document titled “Help improve Augmented Reality Location Accuracy in Maps,” Apple writes, “To help improve the speed and accuracy of augmented reality features in Maps, you can share data with Apple about your surroundings when you use these features. No photos are shared and Apple collects only the data that we need to make these experiences better.”

Make sure the toggle found in the red box is turned on if you want to improve the accuracy of AR features in Apple Maps.

When you raise your iPhone while using AR in Apple Maps for immersive walking directions or to refine your current location, your handset scans your surroundings and detects “feature points” on buildings. These feature points are derived from the physical appearance and shapes of buildings and other stationary objects, and the resulting data cannot be read by a person. Using on-device machine learning, your iPhone compares this data with Apple Maps reference data sent to your phone, which is what enables the AR features in Apple Maps.

When you raise your iPhone to perform the scan, moving things such as people and vehicles are filtered out, leaving only feature points for stationary objects. If feature points move in a way that doesn’t match the movement of your camera, the iPhone assumes they do not belong to a stationary object and discards them.

As your iPhone collects the feature points of stationary objects, it compares them with Apple Maps’ reference data to pinpoint your exact location on the map. This is what powers AR walking directions and the refined location feature. For this to work, the Apple Maps reference data that your camera’s feature points are compared against needs to be updated regularly, and this is where you come in.

As Apple explains, “When you agree to share the feature points that your iPhone camera detects with Apple, you help refresh this reference data to improve the speed and accuracy of these augmented reality features.” Apple encrypts this data while it is in transit and stores it encrypted at rest. The data is not associated with you or your Apple ID. As your iPhone scans, it shares only feature points. No photos are sent to Apple.

Apple claims that “Only an extremely sophisticated attacker with access to Apple’s encoding system” would be a problem

Now read this part carefully. Apple claims that “Only an extremely sophisticated attacker with access to Apple’s encoding system would be able to attempt to recreate an image from the feature points. Since the feature point data that you share is encrypted when it leaves your device and only Apple has access to the data collections, such an attack and recreation are extremely unlikely. The additional noise further prevents any attempt to use the feature point data to recreate an image in which people or vehicles may be identified.”

Immersive AR walking directions on Apple Maps

If this doesn’t ease your concerns about the security of this process, you can make sure you are not sharing data to improve the AR location accuracy of Apple Maps. Go to Settings > Privacy & Security > Analytics & Improvements and make sure the Improve AR Location Accuracy toggle stays off.

On the other hand, the setting is off by default. If you are comfortable that the data you provide to Apple is secure and not connected to you at all, you can turn the toggle on to help improve the accuracy of immersive AR walking directions and the refine location feature. It is up to you.

As noted earlier, if you allow Apple to collect the physical characteristics of the buildings and other stationary objects around you, your Apple ID is not attached to the data and no photos are shared. The bottom line: if you use AR walking directions or refine your location with AR in Apple Maps, you might be helping yourself by helping Apple.